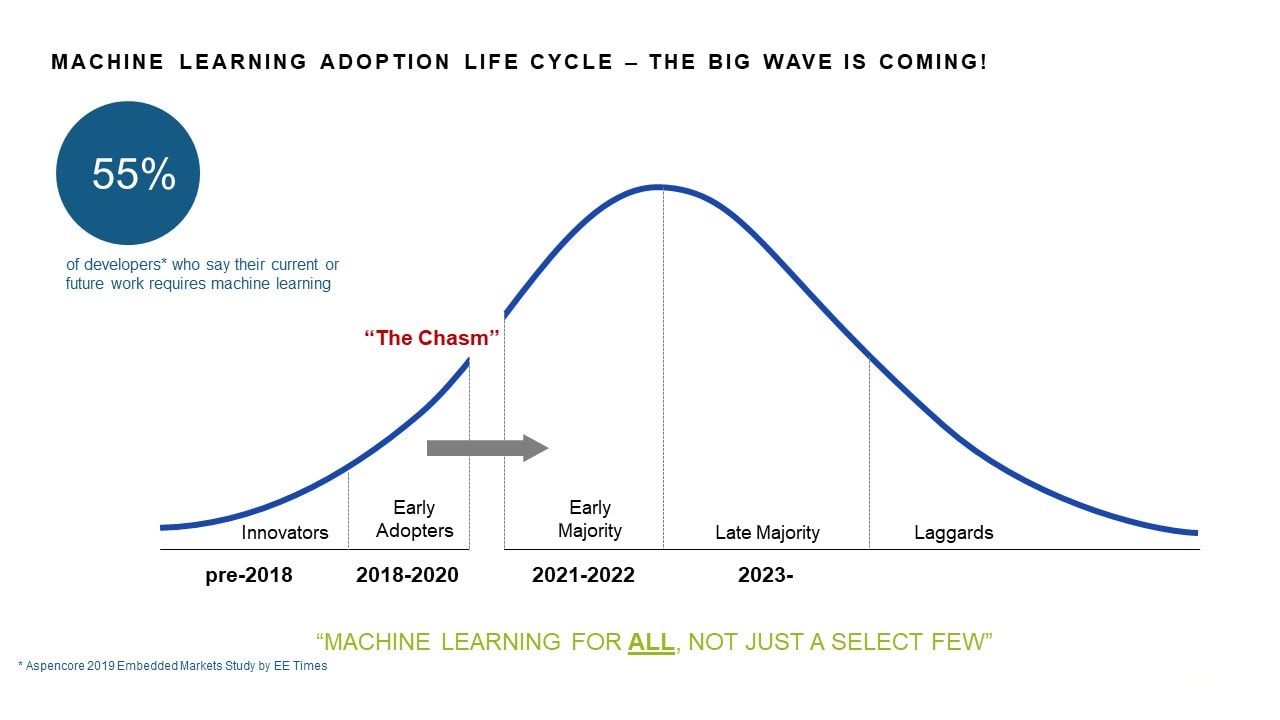

As with many new and complex technologies, machine learning is following an adoption lifecycle in which "early adopters" have been using the technology for the past few years. In a sense, they are ahead of the curve. But starting in 2021, adoption will shift toward the "early majority" (Figure 1). Until now, NXP's eIQ™ machine learning (ML) software development environment has successfully supported the early adopters, but as we cross "The Chasm", machine learning support must become much more comprehensive and easier to use. Let me explain.

What Is an Early Adopter?

Early adopters of machine learning go back more than just a few years; people (and companies) have been working on this technology for decades. However, the move to machine learning at the edge is relatively recent, and the requirements are different. The tools are different. System resources are more constrained. The applications must be more responsive. Early adopters have had to figure out the entire process, from model training to deployment of the inference engine that runs the model, along with every other aspect of system integration (e.g. the video pipeline from capture to output of the inference).

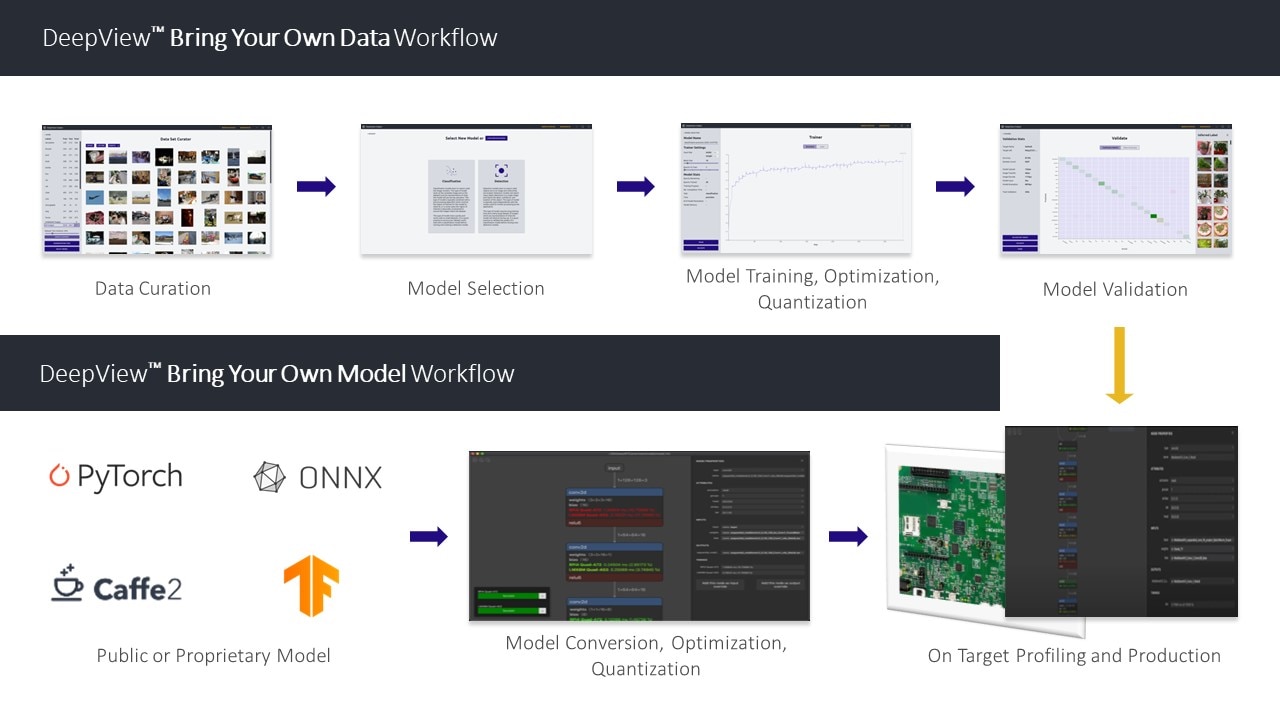

In the development process, once a model is trained, optimized and quantized, the next phase is deploying that model on the device so it can perform its inferencing function. NXP created eIQ to support this process, combining the software components, application examples, user guides and so on that the advanced developer needs to deploy a variety of open-source inference engines on our devices. Today, eIQ provides inferencing support for TensorFlow Lite, Arm NN, ONNX Runtime and the Glow neural network compiler. To use eIQ today, the developer follows a process we call "bring your own model", or BYOM: build your trained models using public or private cloud-based tools, then simply bring your models into the eIQ software environment and plug them into the appropriate inference engine.

Machine Learning for All

The 2019 Embedded Markets Study by EE Times indicated that 55% of developers said their current or upcoming projects require machine learning (I believe this number has risen since then). To cross the chasm and enable machine learning for the majority of developers, machine learning support must become more comprehensive and easier to use. More comprehensive support means that NXP must provide an end-to-end workflow that lets developers generate their training data, select the right model for their application, perform model training, optimization and quantization, and finally run on-target profiling before moving to production.

"Easier to use" is a matter of perspective, but for majority adoption it means that NXP must provide a simplified yet optimized user interface. Ideally, this would enable a machine learning development environment that essentially hides the details and, with the click of a few options, imports the user's training data and deploys the model on the target device.

Crossing the Chasm with Au-Zone Technologies

To make this a reality and cross that adoption chasm, NXP has invested in Canada-based Au-Zone Technologies, forming an exclusive, strategic partnership to expand eIQ with easy-to-use ML tools and to broaden its offering of silicon-optimized inference engines for Edge ML. Au-Zone's DeepView™ ML Tool Suite will augment eIQ with an intuitive graphical user interface (GUI) and workflow, enabling developers of all experience levels (e.g. embedded developers, data scientists, ML experts) to import datasets and/or models, and to rapidly train and deploy NN models and ML workloads across the NXP Edge processing portfolio.

The DeepView ML Tool Suite includes a dataset workbench that gives developers a way to capture and annotate images for model training and validation. Start by drawing a box around the object(s) of interest and labeling each item (search the web for paid and free tools that do this); from there you can start building machine learning datasets and training models. The workbench also supports dataset augmentation, letting developers easily vary image parameters to improve training by reducing over-fitting and increasing robustness to dynamic real-world environments. Augmentation functions include, for example, image rotation, blurring and color conversion. Keep in mind that the NN model only "sees" numbers, so any variation of an input image looks like a different input to it. The more variations of the original training data you can provide, the more robust and accurate your model is likely to be.
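To make the point about the model "seeing" only numbers concrete, here is a minimal sketch of three augmentations (a 90-degree rotation, a 3x3 box blur, and a brightness shift standing in for color changes) applied to a tiny grayscale image stored as nested Python lists. It does not use the DeepView workbench, whose API is not described here; all function names are hypothetical and chosen for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale pixels in [0.0, 1.0], one inner list per row


def rotate90(img: Image) -> Image:
    """Rotate 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(col) for col in zip(*img[::-1])]


def box_blur(img: Image) -> Image:
    """3x3 mean filter; border pixels average only the neighbors that exist."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def adjust_brightness(img: Image, delta: float) -> Image:
    """Shift every pixel by delta and clip to [0, 1] (a simple photometric change)."""
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]


def augment(img: Image) -> List[Image]:
    """Hypothetical helper: the original image plus three augmented variants."""
    return [img, rotate90(img), box_blur(img), adjust_brightness(img, 0.2)]


if __name__ == "__main__":
    sample = [[0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0],
              [1.0, 0.0, 0.0]]
    for variant in augment(sample):
        print(variant)  # each variant is a different grid of numbers
```

Each variant depicts the same object, yet every one is a distinct array of values, which is why augmented copies add real information to a training set.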

The DeepViewML Trainer lets developers select hyperparameters and click "train". In most cases you won't be training your model from scratch, so the DeepViewML Trainer supports transfer learning (i.e. retraining only the last layers of the model). A model optimizer is also part of the DeepView ML Tool Suite; it helps you tune your model for the intended target platform and inference engine. The optimizer provides automated graph-level optimizations such as pruning, fusing and folding layers, which reduce complexity and improve performance without loss of accuracy. In addition, optimizations that trade off accuracy are configurable by the developer. These include quantization, layer replacement and weight rounding. You'll be able to validate lossy optimizations and compare them using the validator tool to fully understand their impact (i.e. size versus accuracy).
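Quantization is the most common of these lossy optimizations. As a rough sketch, and not the DeepView optimizer's actual algorithm, the following applies per-tensor affine int8 quantization to a handful of made-up weights, then reports the storage saving and the worst-case reconstruction error. Weight error is only a proxy here; a validator would measure task accuracy on a held-out dataset.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Per-tensor affine quantization: w is approximated by scale * (q - zero_point)."""
    qmin, qmax = -128, 127
    # Extend the range to include 0.0 so that zero is exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / (qmax - qmin) or 1.0  # guard against an all-zero tensor
    zero_point = max(qmin, min(qmax, round(qmin - w_min / scale)))
    q = [max(qmin, min(qmax, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [scale * (v - zero_point) for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.98, -0.07, 0.33, -1.21]  # made-up example weights
    q, scale, zero_point = quantize_int8(weights)
    restored = dequantize(q, scale, zero_point)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"float32: {4 * len(weights)} bytes, int8: {len(q)} bytes (plus scale and zero point)")
    print(f"max abs error {max_err:.5f}, rounding bound scale/2 = {scale / 2:.5f}")
```

The 4x size reduction is fixed by the data types, while the error depends on the weight range; that is the size-versus-accuracy tradeoff a validation step is meant to expose.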

Through this strategic partnership, we'll also be adding Au-Zone's DeepView run-time inference engine to the eIQ family, complementing the open-source inference technologies already deployed. A key feature of the DeepView engine is that it optimizes system memory usage and data movement specifically for each NXP SoC architecture. Customers will then have an additional choice of inference engine, and the profiling tool will let them quickly compare performance and memory size across the different inference runtime options.
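The kind of comparison a profiling tool makes can be sketched with Python's standard library: time each runtime on the same input and record its peak memory allocation. In this sketch, `engine_a` and `engine_b` are hypothetical stand-ins for two inference runtimes (they compute the same small dense layer in two different ways); a real comparison would call the actual runtimes on the target device and measure device memory rather than Python heap allocations.

```python
import time
import tracemalloc
from statistics import median
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical 64x64 weight matrix shared by both stand-in engines.
WEIGHTS = [[((i * 7 + j * 3) % 11) / 10.0 for j in range(64)] for i in range(64)]


def engine_a(x: Vector) -> Vector:
    # Stand-in runtime 1: materializes every product before summing (more memory).
    products = [[w * v for w, v in zip(row, x)] for row in WEIGHTS]
    return [sum(p) for p in products]


def engine_b(x: Vector) -> Vector:
    # Stand-in runtime 2: streams the products row by row (less memory).
    return [sum(w * v for w, v in zip(row, x)) for row in WEIGHTS]


def profile(infer: Callable[[Vector], Vector], sample: Vector, runs: int = 50) -> Dict[str, float]:
    """Return median latency (ms) and peak traced heap allocation (KiB) for one runtime."""
    infer(sample)  # warm-up run, not measured
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies.append(time.perf_counter() - start)
    # Measure memory in a separate run so tracing overhead does not skew the timings.
    tracemalloc.start()
    infer(sample)
    _, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return {"median_ms": median(latencies) * 1e3, "peak_kib": peak / 1024}


if __name__ == "__main__":
    sample = [0.5] * 64
    for name, engine in [("engine_a", engine_a), ("engine_b", engine_b)]:
        stats = profile(engine, sample)
        print(f"{name}: {stats['median_ms']:.3f} ms median, {stats['peak_kib']:.1f} KiB peak")
```

Both stand-ins return identical results, so the choice between them comes down to the latency and memory numbers, which mirrors how a user would pick among inference runtimes.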

If you haven't already joined the machine learning movement, hopefully you'll be crossing the chasm soon and taking advantage of the comprehensive eIQ machine learning (ML) software development environment, complete with the DeepView ML Tool Suite and the DeepView run-time inference engine, available in Q1 2021.